Unleash Your AI Potential: Crafting the Perfect Prompt

Want to get incredible results from AI? It's all about the prompt. This listicle delivers six actionable prompt engineering tips to help you communicate effectively with AI and unlock its full potential. Learn how to go from vague outputs to amazing results with techniques like clear instructions, structured formats, role-based prompting, few-shot learning, chain-of-thought reasoning, and iterative refinement. Whether you're exploring creative AI use cases, automating workflows with tools like Zapier, n8n, or Replit, or simply curious about prompting LLMs, these prompt engineering tips will elevate your AI game.

1. Be Clear and Specific

The cornerstone of effective prompt engineering is clarity and specificity. This is the most fundamental principle for getting what you want from an AI. Think of it like giving directions: a vague request like "Go somewhere nice" will likely lead to a different destination than "Drive 2 miles north, then turn left at the oak tree and go to the blue house on the right." Similarly, vague prompts yield unpredictable results from AI, while precisely worded prompts guide it to generate exactly what you need. Specific instructions, constraints, and explicit expectations significantly reduce the likelihood of the AI misinterpreting your request.

This approach deserves its top spot on any prompt engineering tips list because it's the foundation upon which all other techniques build. Without clear and specific instructions, even the most advanced prompt engineering tactics will likely fall flat. Features like explicit instructions, detailed context, well-defined parameters, and reduced ambiguity are all crucial components of this core principle. The benefits are numerous: more predictable outputs, a reduced need for multiple iterations (saving you valuable time!), and consistency across multiple prompts, making it easier to build reliable AI-driven workflows for everything from vibe marketing to go-to-market strategies and workflow automations.

Whether you're a hobbyist vibe builder, exploring AI use cases, or automating tasks with tools like Replit, n8n, or Zapier, being clear and specific in your prompts is paramount. Imagine using Replit for a coding project; a vague prompt might lead to a generic script, while a specific one generates the exact function you need. The same applies to automating workflows – a clearly defined prompt in Zapier ensures the correct actions are triggered every time.

Examples:

- Poor: "Write about climate change"

- Better: "Write a 500-word analysis on how climate change affects coastal cities, including three specific adaptation strategies and their implementation costs"

The difference is stark. The first prompt is open to a vast array of interpretations. The second provides clear direction on length, topic focus, and required elements.

Tips for Writing Clear and Specific Prompts:

- Define the format: Do you want an essay, a list, a table, a poem, code, etc.?

- Specify length requirements: Word count, number of items, or time duration.

- Include subject-matter constraints: Narrow down the topic to prevent the AI from straying.

- Mention the intended audience: Who is this output for? Adjusting the tone and style based on the audience is crucial.

- Clearly state the purpose: What do you want the output to achieve? Inform, persuade, entertain, etc.?
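
The checklist above can be turned into a small template so that no element gets forgotten. The following is a minimal sketch, not a standard API: `PromptSpec`, `build_prompt`, and the field names are hypothetical, and the audience and purpose values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """The task plus the five checklist items (names are illustrative)."""
    task: str
    output_format: str
    length: str
    constraints: str
    audience: str
    purpose: str


def build_prompt(spec: PromptSpec) -> str:
    """Join the task and its explicit requirements into one prompt."""
    lines = [
        spec.task,
        f"Format: {spec.output_format}",
        f"Length: {spec.length}",
        f"Constraints: {spec.constraints}",
        f"Audience: {spec.audience}",
        f"Purpose: {spec.purpose}",
    ]
    return "\n".join(lines)


spec = PromptSpec(
    task="Analyze how climate change affects coastal cities.",
    output_format="essay with a short heading per strategy",
    length="about 500 words",
    constraints="cover three adaptation strategies and their implementation costs",
    audience="city council members without a science background",
    purpose="inform a budgeting decision",
)
prompt = build_prompt(spec)
print(prompt)
```

Filling in every field forces the upfront planning this tip calls for, and an empty field is an easy signal that the prompt is still vague.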

Pros:

- More predictable outputs

- Reduces need for multiple iterations

- Saves time by getting desired results faster

- Creates consistency across multiple prompts

Cons:

- Can become verbose (though this is a small price to pay for accuracy)

- Requires more thought and planning upfront

- May inadvertently restrict creative outputs if too constraining (finding the right balance is key)

This principle has been popularized and emphasized by many leading figures in the AI space, including OpenAI documentation, Anthropic's Claude best practices, and influential prompt engineering researchers like Riley Goodside. By mastering this core principle, you’ll be well on your way to harnessing the full power of AI.

2. Use Structured Formats

One of the most effective prompt engineering tips you can implement is using structured formats. This technique provides a framework that guides AI models to organize information in a specific, consistent manner. Instead of hoping the AI will present the output in a usable way, you're providing it with a blueprint. By requesting outputs in formats like JSON, markdown tables, or even simple numbered lists, you create predictable structures that are easier to parse, both for you and for any automated systems you might be using. This is especially valuable for data extraction tasks or when the AI's output needs to be processed programmatically. Think of it like giving the AI a template to fill in, ensuring the information comes out organized and ready to use.

This approach shines when dealing with tasks like building AI-powered workflows for your vibe marketing or automating go-to-market strategies. Imagine using Zapier, n8n, or Replit and needing data to flow seamlessly between different steps. Structured outputs make that integration incredibly smooth. For example, you can create a structured prompt to analyze customer feedback and output the sentiment scores as a JSON object. This JSON data can then be easily ingested by other tools in your workflow for automated reporting or decision-making. This structured approach earns its place on this list because it bridges the gap between requesting information and immediately putting that information to work.

Features of structured prompting include defined output structures, format-specific instructions, schema definitions (especially for data outputs like JSON), and templated responses. These features provide fine-grained control over how the AI delivers the information. The benefits are substantial: you get consistent and predictable outputs, which simplifies automated processing and improves the readability and organization of the response. This ultimately reduces the need for tedious post-processing of the AI-generated content.

Of course, there are a few trade-offs. Using structured formats may limit the AI’s creative expression and can be unnecessarily rigid for simple requests. The format specifications themselves also add a little complexity to your prompts.

Examples of Effective Implementation:

- Request for JSON: 'Analyze these three companies and return the data as a JSON object with keys for "company_name", "revenue", "growth_rate", and "market_position".'

- Code Generation: When prompting a coding assistant such as GitHub Copilot, naming the target language, framework, and function signature steers it toward code in the pattern you want.

- SQL Queries: Creating SQL queries requires adherence to specific formatting conventions to ensure the database understands the request.

Actionable Tips for Using Structured Formats:

- Be Upfront: Specify the exact format requirements at the beginning of your prompt.

- Show, Don't Just Tell: Provide a small example of the desired format.

- Define Your Schema: For JSON, clearly describe the expected keys and data types within the schema.

- Markdown Magic: Use markdown for formatting text outputs when appropriate.

- XML for Long Outputs: Consider using XML tags to define sections in longer, more complex outputs.
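
As a rough illustration of the tips above, the sketch below states the format first, spells out the schema, and then checks a reply against it using only Python's standard `json` module. The `SCHEMA` layout, the function names, and the sample reply are assumptions for illustration; the reply is hard-coded in place of a real model call.

```python
import json

# Expected keys mapped to (human-readable type, accepted Python types).
SCHEMA = {
    "company_name": ("string", (str,)),
    "revenue": ("number", (int, float)),
    "growth_rate": ("number", (int, float)),
    "market_position": ("string", (str,)),
}


def build_json_prompt(companies):
    """State the format first, then the schema, then the task."""
    schema = "\n".join(f'- "{key}": {kind}' for key, (kind, _) in SCHEMA.items())
    return (
        "Return only a JSON array with one object per company, and no other text.\n"
        "Each object must have exactly these keys:\n"
        f"{schema}\n\n"
        f"Companies to analyze: {', '.join(companies)}"
    )


def parse_reply(reply):
    """Parse the reply and check every object against SCHEMA."""
    rows = json.loads(reply)
    if not isinstance(rows, list):
        raise ValueError("expected a JSON array")
    for row in rows:
        if not isinstance(row, dict):
            raise ValueError("expected a JSON object per company")
        for key, (_, types) in SCHEMA.items():
            if not isinstance(row.get(key), types):
                raise ValueError(f"bad or missing field: {key}")
    return rows


prompt = build_json_prompt(["Acme", "Globex", "Initech"])

# Hypothetical reply, hard-coded here instead of calling a model.
sample_reply = (
    '[{"company_name": "Acme", "revenue": 12.5, '
    '"growth_rate": 0.08, "market_position": "challenger"}]'
)
rows = parse_reply(sample_reply)
print(rows[0]["company_name"])
```

Validating the reply before passing it downstream matters in automated workflows, since a model can still return malformed or incomplete JSON even when the prompt is explicit.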

The power of structured data extraction has been highlighted by individuals like Simon Willison, and resources like Brex’s Prompt Engineering Guide and the LangChain framework have further popularized this approach.

3. Leverage Role-Based Prompting

One of the most powerful prompt engineering tips you can utilize is role-based prompting. This technique involves instructing the AI to adopt a specific persona, expertise level, or professional role when generating responses. Think of it like giving the AI a costume and a script – it helps frame the context and perspective from which the AI should approach the task. This often results in a more appropriate tone, terminology, and depth of analysis, making your output much more effective for its intended purpose. This is a crucial skill for anyone exploring AI use cases, from hobbyist vibe builders to those working on go-to-market strategies and workflow automations using tools like Replit, n8n, or Zapier.

So, how does it work? By defining a role at the beginning of your prompt, you're essentially setting the stage for the AI. You're telling it, "Pretend you are this type of person with this level of knowledge, and answer the following question." This simple act can dramatically change the quality and relevance of the AI's output. Features of effective role-based prompting include clear character/role definitions, specifying expertise levels, framing the response through a professional perspective, and providing contextual behavior guidance.

Here are some examples of successful role-based prompting:

- "Act as an expert mechanical engineer and explain how hydraulic systems work in heavy machinery."

- "As a financial advisor with 20 years of experience, analyze these investment options for a risk-averse client nearing retirement."

- Even academic research, like that of Ethan Mollick, a Wharton professor researching AI, has demonstrated how role prompting improves specialized task performance. His work, along with the persona-based prompting techniques pioneered by Riley Goodside, and resources like the system message documentation in ChatGPT and Claude, have popularized and refined this technique.

Why should you use role-based prompting? Consider these benefits:

- Elicits domain-specific knowledge and terminology: Need marketing copy? Ask the AI to act as a seasoned copywriter. Want technical explanations? Have the AI embody an expert in that field.

- Creates consistent tone throughout responses: This is especially useful for longer pieces of content or when building a specific brand voice.

- Improves relevance of examples and explanations: A financial advisor persona will provide different examples than a mechanical engineer, ensuring the information is tailored to the role and context.

- Can produce more creative or specialized outputs: Need a captivating story? Ask the AI to be a novelist. Want code in a specific style? Tell it to be a senior software developer.

However, there are some potential downsides to be aware of:

- May reinforce stereotypes if not carefully worded: Be mindful of biases and avoid perpetuating harmful stereotypes in your role definitions.

- Can unnecessarily constrain the model's knowledge base: If the role is too narrow, the AI might miss relevant information outside the defined scope.

- Sometimes leads to verbose responses with role-specific filler content: You might need to add constraints like "be concise" or "avoid jargon" to keep the output focused.

Here are some actionable tips for leveraging role-based prompting:

- Define the role early in the prompt: This sets the context for the entire interaction.

- Specify the experience level or qualifications of the persona: "Entry-level," "expert," or "20 years of experience" adds nuance to the role.

- Include relevant context about who the response is for: "Explain this to a five-year-old" versus "Explain this to a PhD candidate" will yield very different results.

- Consider combining multiple expert personas for complex topics: For interdisciplinary subjects, you might ask the AI to be both a scientist and an ethicist.

- Add specific constraints to prevent unwanted role-play elements: Tell the AI to avoid excessive characterization or unnecessary dialogue.
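
The tips above can be sketched in code. Many chat-style APIs accept a list of messages with a `system` and a `user` role, but the exact shape varies by provider, so treat the dictionary layout below as an assumption. `build_role_messages` is a hypothetical helper, and the question text is made up for illustration.

```python
def build_role_messages(role, experience, audience, question, constraints=()):
    """Put the persona first, then the audience, then any guard rails."""
    system = f"You are {role} with {experience}. You are answering for {audience}."
    if constraints:
        system += " " + " ".join(constraints)
    return [
        {"role": "system", "content": system},  # role defined early
        {"role": "user", "content": question},
    ]


messages = build_role_messages(
    role="a financial advisor",
    experience="20 years of experience",
    audience="a risk-averse client nearing retirement",
    question="Compare index funds, bonds, and annuities for my situation.",
    constraints=("Be concise.", "Avoid jargon.", "Do not add role-play dialogue."),
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the persona in its own message means the same role definition can be reused across many questions without retyping it.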

Role-based prompting is a valuable tool for prompt engineering, allowing you to tailor the AI’s output to your specific needs. By understanding its features, benefits, and potential drawbacks, you can harness its power to create more effective and targeted content. This technique is particularly useful for non-technical AI enthusiasts looking to enhance their prompting skills and explore the capabilities of LLMs in various applications, from vibe marketing to workflow automations.

4. Implement Few-Shot Learning

Few-shot learning is a powerful prompt engineering tip that allows you to guide AI models effectively by showing, rather than telling. Instead of crafting complex instructions, you provide the AI with a few examples of the desired input and output. This "learning by example" approach helps the model understand the pattern you're aiming for, whether it's a specific formatting style, a reasoning process, or even a particular tone of voice. The model then uses these examples as a template for generating new outputs when given similar inputs. This technique significantly enhances your control over the AI's output and can streamline your workflow, especially for repetitive tasks.

Think of it like teaching a child to sort shapes. Instead of explaining the abstract concepts of "square" and "circle," you show them a few examples of each. Few-shot learning works similarly with AI. It is particularly useful because it simplifies the process of communicating complex requirements to the model.

For instance, imagine you want to convert academic citations between different formats (e.g., APA to MLA). By providing 2-3 examples of the conversion, you can effectively teach the AI the transformation rules without explicitly defining them. This also applies to creating consistent product descriptions, writing marketing copy, or even generating code. Coding assistants such as GitHub Copilot behave similarly in practice: the existing code in your file acts as a set of examples that shapes the suggestions you get.

This approach deserves a spot on this list because it bridges the gap between human intuition and AI execution. For non-technical AI enthusiasts, hobbyist vibe builders, and those exploring AI for go-to-market strategies, few-shot learning simplifies AI interaction. It makes prompting LLMs (Large Language Models) in tools like Replit, n8n, and Zapier far more intuitive, empowering users to automate workflows and generate creative content with ease.

Benefits and Features of Few-Shot Learning:

- Reduces the need for complex explanations: Show, don't tell!

- Produces more consistent outputs: Examples provide a clear template for the AI to follow.

- Handles unusual formats and styles: Easily guide the AI towards specific stylistic choices.

- Effective for specialized tasks: Ideal for technical or niche applications.

- Implicit style and format guidance: Demonstrates desired output characteristics through examples.

- Pattern recognition without explicit rules: The model learns from the provided patterns implicitly.

Pros and Cons:

- Pros: Reduces complexity, enhances consistency, handles unique styles, excels in specialized tasks.

- Cons: Requires crafting relevant examples, consumes token context space (leaving less room for the actual input), may lead to a kind of overfitting (the model copies surface details of the examples and struggles with inputs that differ from them), can be less flexible in novel situations.

Actionable Tips for Effective Few-Shot Learning:

- Use 2-5 diverse examples: This usually provides a good balance between clarity and context space.

- Vary example complexity: Include both simple and complex examples to cover a broader range of scenarios.

- Clearly separate examples from the actual request: Use clear delimiters (e.g., "---" or a specific keyword) to distinguish between the examples and the task.

- Choose representative examples: Select examples that truly reflect the full scope of the task.

- Include edge cases: For complex tasks, add examples of unusual or boundary scenarios.
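
Here is one way the tips above might look in code: a few input/output pairs of varying complexity, one edge case, and a clear delimiter between the examples and the real request. This is a sketch under stated assumptions; `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the product examples are all hypothetical.

```python
def build_few_shot_prompt(instruction, examples, new_input, delimiter="---"):
    """Lay out the instruction, the worked examples, then the real request."""
    blocks = [instruction]
    for source, target in examples:
        blocks.append(f"Input: {source}\nOutput: {target}")
    # The last block leaves "Output:" open for the model to complete.
    blocks.append(f"Input: {new_input}\nOutput:")
    return f"\n{delimiter}\n".join(blocks)


examples = [
    # Simple case
    ("mug, ceramic, 350 ml", "A 350 ml ceramic mug for everyday coffee."),
    # More complex case with several attributes
    ("backpack, nylon, 20 L, waterproof",
     "A waterproof 20 L nylon backpack built for wet commutes."),
    # Edge case: almost no details provided
    ("notebook", "A simple notebook for quick notes."),
]

prompt = build_few_shot_prompt(
    instruction="Write a one-sentence product description from the specs.",
    examples=examples,
    new_input="desk lamp, LED, dimmable",
)
print(prompt)
```

Ending the prompt on an open `Output:` line invites the model to continue the pattern rather than comment on it.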

Few-shot learning has been popularized by research such as the GPT-3 paper by Tom Brown et al., OpenAI's documentation, Andrew Ng's work on meta-learning, and vibrant prompt engineering communities on platforms like GitHub and Hugging Face. By mastering this prompt engineering tip, you can unlock the full potential of AI and create truly impressive outputs.

5. Use Chain-of-Thought Reasoning

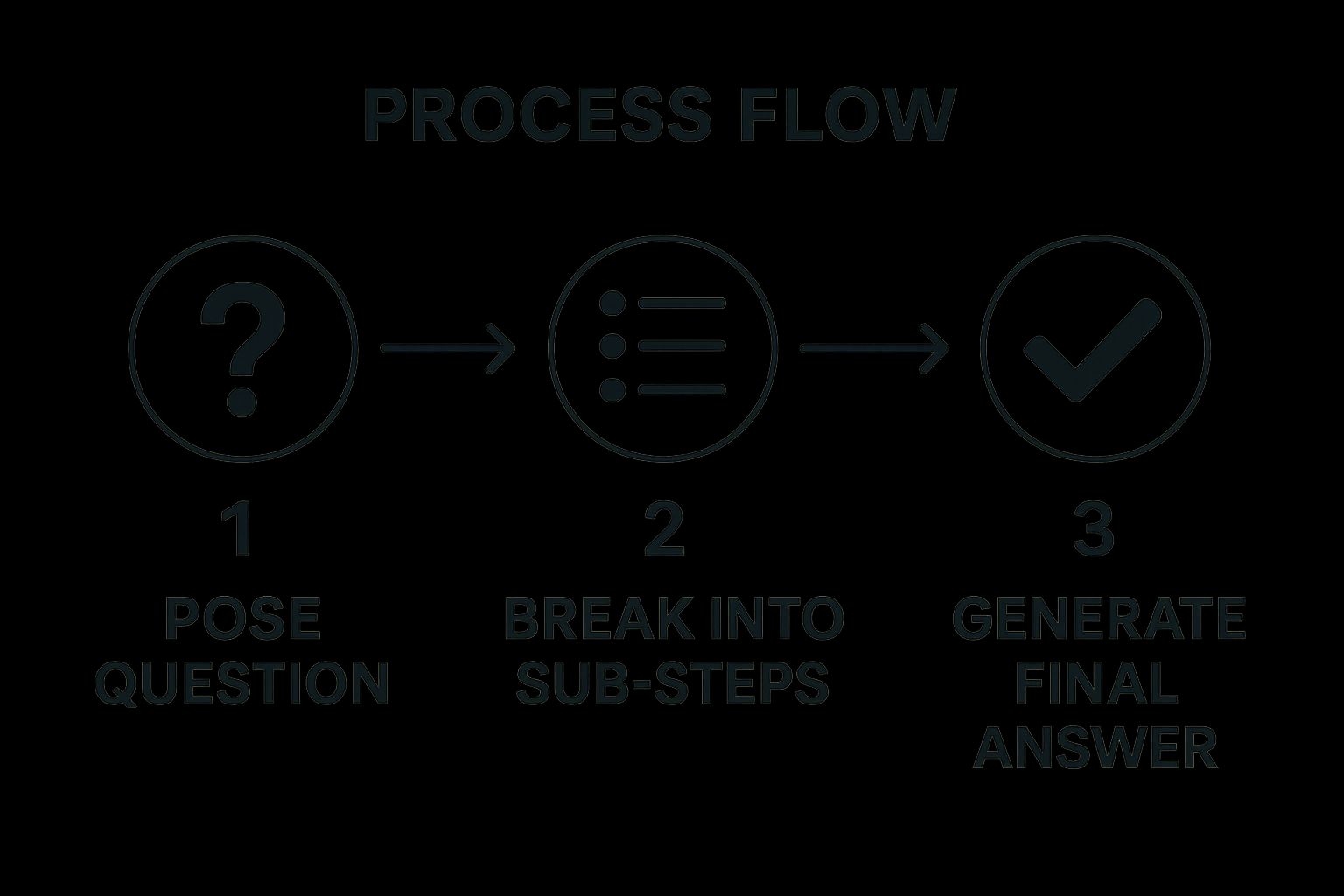

Chain-of-Thought (CoT) prompting is a powerful prompt engineering tip that encourages AI models to think step-by-step, like a human, before arriving at a final answer. Instead of just requesting a solution, you guide the AI through a logical sequence of reasoning, leading to more accurate and transparent results for complex problems. This method significantly improves the performance of large language models (LLMs) on tasks requiring logical deduction, mathematical calculations, or multi-step reasoning. This approach makes it easier to understand how the AI arrived at its conclusion, making it ideal for applications where transparency and auditability are crucial.

The infographic above visualizes the CoT process. It starts with a complex prompt, then breaks it down into smaller, manageable steps. Each step builds on the previous one, creating a chain of logical reasoning. Finally, the AI synthesizes the intermediate conclusions into a final, comprehensive answer. The visual emphasizes the iterative and logical nature of Chain-of-Thought prompting. The step-by-step breakdown not only leads to better accuracy but also makes the AI's thinking process transparent and understandable.

Imagine you're using an LLM to troubleshoot a complex bug in your Zapier workflow. Instead of simply asking "Why isn't my Zap working?", CoT prompting encourages you to ask a series of more focused questions: "What are the individual steps in this Zap?", "What data is being passed between each step?", "At which step does the expected data not match the actual data?". This breakdown helps the LLM pinpoint the exact location of the error, much like a programmer would debug their code.

Here's a breakdown of the CoT process for prompt engineering:

- Define the complex problem: Clearly articulate the ultimate goal or question you want the AI to answer.

- Decompose the problem: Break down the complex problem into smaller, more manageable sub-problems or steps.

- Prompt for each step: Create specific prompts for each sub-problem, requesting the AI to provide intermediate reasoning and conclusions.

- Synthesize the results: Combine the answers to the sub-problems to form a final, comprehensive answer to the original complex problem.

This structured approach ensures a clear, logical flow and improves the accuracy of the final result.
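
The four steps above can be sketched as a small loop that prompts for each sub-problem in turn and feeds earlier conclusions forward. This is an illustrative sketch, not a specific library's API: `run_chain` is hypothetical, and `fake_ask` is a stand-in that records prompts instead of calling a real model.

```python
from typing import Callable, List


def run_chain(problem: str, steps: List[str], ask: Callable[[str], str]) -> str:
    """Prompt for each sub-problem in order, then ask for a synthesis."""
    notes: List[str] = []
    for number, step in enumerate(steps, start=1):
        context = "\n".join(notes) or "(none yet)"
        prompt = (
            f"Overall problem: {problem}\n"
            f"Findings so far:\n{context}\n"
            f"Step {number}: {step}\n"
            "Explain your reasoning, then state a one-line conclusion."
        )
        notes.append(f"Step {number} conclusion: {ask(prompt)}")
    summary_prompt = (
        f"Overall problem: {problem}\n"
        + "\n".join(notes)
        + "\nCombine these conclusions into a final answer."
    )
    return ask(summary_prompt)


sent: List[str] = []


def fake_ask(prompt: str) -> str:
    """Stand-in for a real model call: records the prompt, returns a stub."""
    sent.append(prompt)
    return f"stub answer {len(sent)}"


final = run_chain(
    "Why isn't my Zap working?",
    [
        "What are the individual steps in this Zap?",
        "What data is being passed between each step?",
        "At which step does the expected data not match the actual data?",
    ],
    fake_ask,
)
print(len(sent), "prompts sent")
```

Swapping `fake_ask` for a function that calls your model of choice is the only change needed to run the chain for real.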

Pros of using Chain-of-Thought Prompting:

- Significantly improves accuracy: Especially for complex problems in math, logic, and multi-step reasoning.

- Transparent and verifiable reasoning: Makes it easier to understand and audit the AI's decision-making process.

- Error identification: Simplifies the process of finding errors in the AI's reasoning.

Cons of using Chain-of-Thought Prompting:

- Longer outputs and higher token consumption: The step-by-step reasoning requires more text, leading to increased cost.

- Unnecessary for simple tasks: Can be overkill when a straightforward prompt is sufficient.

- Potential to expose flawed reasoning: May reveal inconsistencies in the AI's logic even if it reaches the correct conclusion.

Actionable tips for implementing Chain-of-Thought prompting:

- Add "Let's think about this step by step" to your prompts.

- Ask the model to explicitly state its assumptions at each step.

- For mathematical problems, request the model to "show all work."

- Use CoT for any task involving multi-step reasoning or calculations.

- Consider including verification steps to check the solution.
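
A lightweight way to apply these tips is to wrap any question in a reusable instruction and then pull out the final answer for downstream use. The sketch below assumes a `Final answer:` marker, which is a convention invented here rather than something models require, and the sample reply is hard-coded instead of coming from a real model.

```python
def with_chain_of_thought(question):
    """Append step-by-step, show-your-work, and verification instructions."""
    return (
        f"{question}\n\n"
        "Let's think about this step by step. "
        "State your assumptions at each step and show all work. "
        "Then verify the result and finish with a line of the form "
        "'Final answer: <answer>'."
    )


def extract_final_answer(reply):
    """Return the text after the last 'Final answer:' marker, or None."""
    for line in reversed(reply.splitlines()):
        if line.strip().lower().startswith("final answer:"):
            return line.split(":", 1)[1].strip()
    return None


prompt = with_chain_of_thought(
    "A shop has 3 boxes of 12 pens and gives away 5 pens. How many are left?"
)

# Hypothetical reply, hard-coded here instead of calling a model.
sample_reply = (
    "Step 1: 3 boxes x 12 pens = 36 pens.\n"
    "Step 2: 36 - 5 given away = 31 pens.\n"
    "Check: 31 + 5 = 36, which matches step 1.\n"
    "Final answer: 31"
)
answer = extract_final_answer(sample_reply)
print(answer)
```

Separating the reasoning from a clearly marked answer keeps the transparency benefit while still giving automated steps a single value to work with.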

Chain-of-Thought prompting earns its place in this list of prompt engineering tips because deliberate, step-by-step reasoning can significantly improve the accuracy and transparency of AI-generated responses. That benefits AI enthusiasts, hobbyist vibe builders, and anyone using AI for workflow automation in tools like n8n, Replit, and Zapier. Research by Jason Wei and others at Google, along with work by Anthropic on Constitutional AI, has demonstrated the power of this technique.

6. Iterate and Refine Prompts

This prompt engineering tip focuses on the iterative nature of crafting effective prompts. Iterating and refining your prompts is crucial for achieving optimal results with large language models (LLMs). It's a core principle of prompt engineering because prompts aren't static instructions; they're dynamic tools that can be honed and improved over time. This process involves treating prompts as evolving artifacts, constantly refined based on the outputs they generate. Think of it like sculpting – you start with a rough idea and gradually refine it until you achieve your desired masterpiece.

This approach is essential for several reasons. First, LLMs can be unpredictable, and slight changes in wording can significantly impact the output. Second, your initial prompt may not fully capture the nuances of what you're trying to achieve. Through iteration, you can identify and address these gaps. Finally, iterative refinement allows you to adapt to the evolving nature of LLMs themselves as they are constantly being updated and improved.

How it Works:

Iterative prompt refinement involves a cyclical process of testing, analyzing, and adjusting. You begin with an initial prompt, submit it to the LLM, and evaluate the output. Based on this evaluation, you tweak the prompt, perhaps by adding more context, changing keywords, or restructuring the phrasing. Then, you submit the revised prompt and analyze the new output. This cycle repeats until you're satisfied with the results. Experimentation is key to refining your prompts, and reviewing practical examples can significantly improve your results.

Features of Iterative Prompt Refinement:

- Systematic testing methodology: A structured approach to testing different prompt variations.

- Incremental improvements: Making small, targeted changes to the prompt in each iteration.

- Output analysis feedback loop: Using the LLM's output to inform subsequent prompt modifications.

- Version tracking of prompt variations: Keeping a record of all prompt versions and their corresponding outputs.

Pros:

- Leads to progressively better results over time.

- Helps identify and address edge cases.

- Creates robust prompts that work consistently.

- Builds a deeper understanding of model behavior.

Cons:

- Can be a time-consuming process.

- May require significant experimentation.

- Benefits may plateau after multiple iterations.

- Can potentially lead to overly complex prompts.

Examples of Successful Implementation:

- OpenAI's iterative development of RLHF (Reinforcement Learning from Human Feedback) is a model training method rather than a prompting technique, but it illustrates the same principle: repeated rounds of feedback and refinement lead to significant improvements in output quality.

- Anthropic's constitutional AI approach has the model critique and revise its own responses against a set of written principles, showing how ethical considerations can be built in through successive rounds of refinement.

- Enterprise prompt development workflows at companies like Brex and Scale AI highlight the practical application of iterative prompt engineering in real-world scenarios.

Tips for Iterative Prompt Refinement:

- Keep a detailed record of all prompt versions and their outputs. This allows you to track progress and revert to previous versions if necessary.

- Change only one element of the prompt at a time to isolate the effects of each modification.

- Test prompts across different scenarios and edge cases to ensure robustness.

- Consider creating A/B tests for important prompts to compare the performance of different variations.

- Document why certain changes improved performance to build your understanding of effective prompt engineering techniques.
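
The record-keeping and A/B testing tips above can be sketched with a small version log and a pass/fail check. Everything here is hypothetical: the class and function names, the ten-word limit, and the outputs, which are hard-coded instead of coming from a real model.

```python
from dataclasses import dataclass, field


@dataclass
class PromptVersion:
    version: int
    prompt: str
    change_note: str  # what changed and why
    outputs: list = field(default_factory=list)


class PromptLog:
    """Keeps every prompt version so results can be compared or reverted."""

    def __init__(self):
        self.versions = []

    def add(self, prompt, change_note):
        entry = PromptVersion(len(self.versions) + 1, prompt, change_note)
        self.versions.append(entry)
        return entry

    def revert(self, version):
        """Return the prompt text of an earlier version."""
        return self.versions[version - 1].prompt


def pass_rate(outputs, check):
    """Fraction of outputs that satisfy a pass/fail check."""
    return sum(1 for out in outputs if check(out)) / len(outputs)


def within_limit(text):
    return len(text.split()) <= 10


log = PromptLog()
v1 = log.add("Describe this mug.", "initial prompt")
# Only one element changes between versions: a length constraint is added.
v2 = log.add("Describe this mug in at most 10 words.", "added length limit")

# Hypothetical outputs, hard-coded here instead of calling a model.
v1.outputs = [
    "Our new mug is great and you will love it so much every day.",
    "Buy the mug.",
]
v2.outputs = ["A sturdy ceramic mug.", "Keeps coffee hot for hours."]

for entry in log.versions:
    rate = pass_rate(entry.outputs, within_limit)
    print(entry.version, entry.change_note, rate)
```

Because each version stores its change note next to its outputs, the log doubles as documentation of which edits actually helped.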

When and Why to Use This Approach:

Iterative prompt refinement is valuable whenever precision and reliability are crucial. It's especially useful for tasks requiring complex outputs, such as creative writing, code generation, or detailed explanations. While it might be more time-consuming than a "one-and-done" approach, the resulting improvement in output quality makes it a worthwhile investment for serious prompt engineers and hobbyists alike. Model behavior can also drift as providers update their models, which is another reason to revisit important prompts periodically. By embracing this iterative process, you can unlock the full potential of LLMs and consistently generate high-quality outputs tailored to your specific needs.

6 Key Prompt Engineering Tips Comparison

| Technique | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Be Clear and Specific | Low to Medium – requires thoughtful planning | Low – mainly effort/time | More predictable, consistent outputs | General purpose, any prompt needing clarity | Reduces ambiguity, faster desired results |
| Use Structured Formats | Medium – need to define schemas/formats | Medium – requires format knowledge | Consistent, machine-parseable outputs | Data extraction, automated processing | Facilitates automation, reduces post-processing |
| Leverage Role-Based Prompting | Medium – defining roles and expertise | Low to Medium – some context needed | Domain-specific, relevant tone and depth | Specialized knowledge tasks, tone-sensitive | Improves relevance, consistent voice |
| Implement Few-Shot Learning | Medium to High – creating quality examples | Medium – token budget for examples | Pattern-following, consistent stylistic outputs | Complex formats, specialized or technical tasks | Reduces need for complex instructions, consistent style |
| Use Chain-of-Thought Reasoning | Medium – prompt design is more complex | Medium – longer outputs consume tokens | Higher accuracy on complex reasoning | Multi-step problems, math, logic, decision making | Improves accuracy, transparent reasoning |
| Iterate and Refine Prompts | High – continuous testing and revision | Medium to High – time and experimentation | Progressive improvement, robust prompts | All scenarios needing optimized results | Builds deeper model understanding, handles edge cases |

Level Up Your AI Game with VibeMakers

Mastering prompt engineering is more than just a skill; it's the key to unlocking the true potential of AI. Throughout this article, we've explored six essential prompt engineering tips: being clear and specific, using structured formats, role-based prompting, few-shot learning, chain-of-thought reasoning, and iterative refinement. By implementing these strategies, you’ll transform from a casual user to a true AI conductor, orchestrating powerful outputs across diverse applications, including vibe marketing, workflow automation, and go-to-market strategies. The more you practice and refine these techniques, the more effectively you'll be able to communicate your vision to the AI and achieve truly remarkable results. This understanding empowers you to not just use AI, but to shape its output to your specific needs, whether you're building in Replit, n8n, Zapier, or other platforms.

Ready to elevate your prompt engineering journey and unlock the full power of AI? VibeMakers provides cutting-edge tools and resources, along with a vibrant community of AI enthusiasts, to help you master these essential prompt engineering tips. Visit VibeMakers today and start creating the future of AI, together.